This page is generated by Machine Translation from Japanese.

Overview

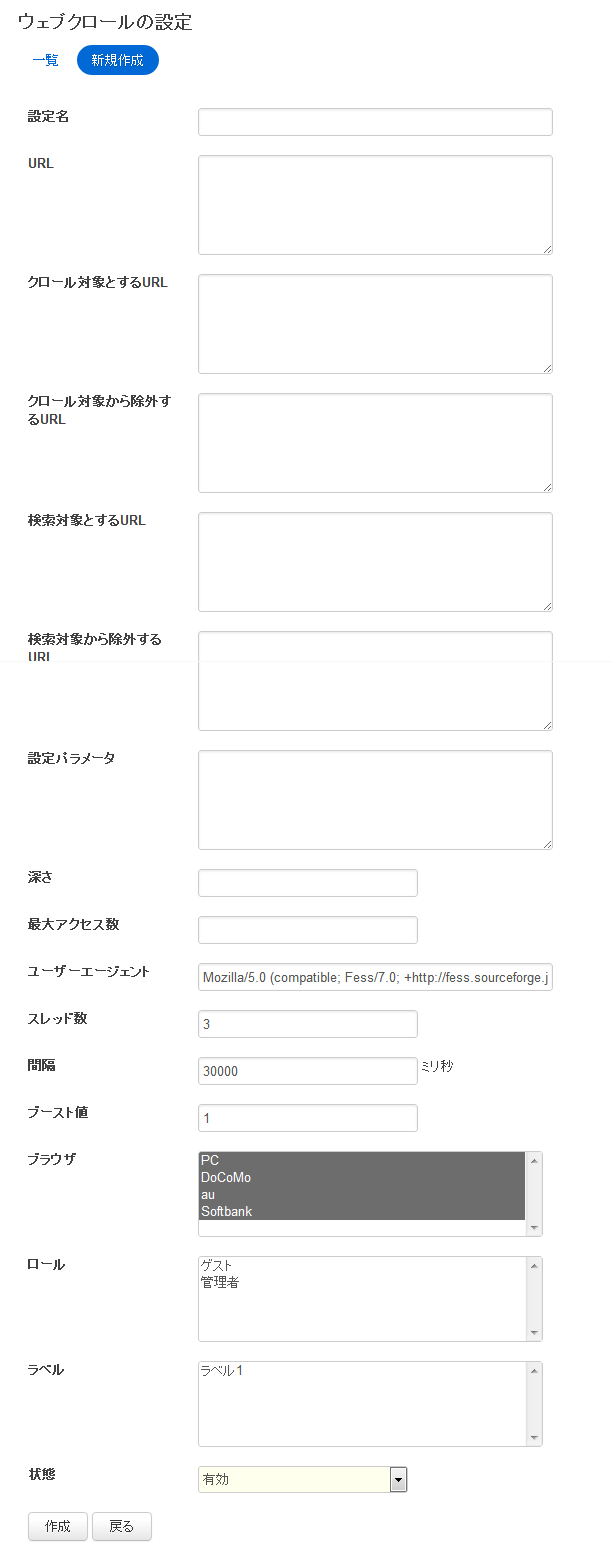

Describes the settings here, using Web crawling.

Recommends that if you want to index document number 100000 over in Fess crawl settings for one to several tens of thousands of these. One crawl setting a target number 100000 from the indexed performance degrades.

How to set up

How to display

In Administrator account after logging in, click menu Web.

Setting item

Setting name

Is the name that appears on the list page.

Specify a URL

You can specify multiple URLs. http: or https: in the specify starting. For example,

http://localhost/

http://localhost:8080/

The so determines.

URL filtering

By specifying regular expressions you can exclude the crawl and search for specific URL pattern.

| URL to crawl | Crawl the URL for the specified regular expression. |

| Excluded from the crawl URL | The URL for the specified regular expression does not crawl. The URL to crawl, even WINS here. |

| To search for URL | The URL for the specified regular expression search. Even if specified and the URL to the search excluded WINS here. |

| To exclude from the search URL | URL for the specified regular expression search. Unable to search all links since they exclude from being crawled and crawled when the search and not just some. |

Table: URL filtering contents list

For example, http: URL to crawl if not crawl //localhost/ less than the

http://localhost/.*

Also be excluded if the extension of png want to exclude from the URL

.*\.png$

It specifies. It is possible to specify multiple in the line for.

Setting parameters

You can specify the crawl configuration information.

Depth

That will follow the links contained in the document in the crawl order can specify the tracing depth.

Maximum access

You can specify the number of documents to retrieve crawl. If you do not specify people per 100,000.

User agent

You can specify the user agent to use when crawling.

Number of threads

Specifies the number of threads you want to crawl. Value of 5 in 5 threads crawling the website at the same time.

Interval

Is the interval (in milliseconds) to crawl documents. 5000 when one thread is 5 seconds at intervals Gets the document.

Number of threads, 5 pieces, will be to go to and get the 5 documents per second between when 1000 millisecond interval,. Set the adequate value when crawling a website to the Web server, the load would not load.

Boost value

You can search URL in this crawl setting to weight. Available in the search results on other than you want to. The standard is 1. Priority higher values, will be displayed at the top of the search results. If you want to see results other than absolutely in favor, including 10,000 sufficiently large value.

Values that can be specified is an integer greater than 0. This value is used as the boost value when adding documents to Solr.

Browser type

Register the browser type was selected as the crawled documents. Even if you select only the PC search on your mobile device not appear in results. If you want to see only specific mobile devices also available.

Roll

You can control only when a particular user role can appear in search results. You must roll a set before you. For example, available by the user in the system requires a login, such as portal servers, search results out if you want.

Label

You can label with search results. Search on each label, such as enable, in the search screen, specify the label.

State

Crawl crawl time, is set to enable. If you want to avoid crawling temporarily available.

Other

Sitemap

Fess and crawls sitemap file, as defined in the URL to crawl. Sitemaphttp://www.sitemaps.org/ Of the specification. Available formats are XML Sitemaps and XML Sitemaps Index the text (URL line written in)

Site map the specified URL. Sitemap is a XML files and XML files for text, when crawling that URL of ordinary or cannot distinguish between what a sitemap. Because the file name is sitemap.*.xml, sitemap.*.gz, sitemap.*txt in the default URL as a Sitemap handles (in webapps/fess/WEB-INF/classes/s2robot_rule.dicon can be customized).

Crawls sitemap file to crawl the HTML file links will crawl the following URL in the next crawl.